In autonomous mode, the demonstrated robot can transport objects within the factory. Its high payload capacity allows it to transfer even heavy materials or products. A telepresence operation mode is also provided for remote inspection of production technologies: remote operators using immersive telepresence can inspect the site and, in some cases, also manipulate objects. The expected benefit is a reduction in maintenance costs when operating remote, highly or fully automated production facilities.

Research Focus

- Object Detection
- Localization Module
- Global Pose Estimation Module
- Trajectory Planning
- Mobile Robot Path Planning

Target

Companies operating remote branches, and technology providers offering support and maintenance for their technologies in remote areas.

Demonstrator Description

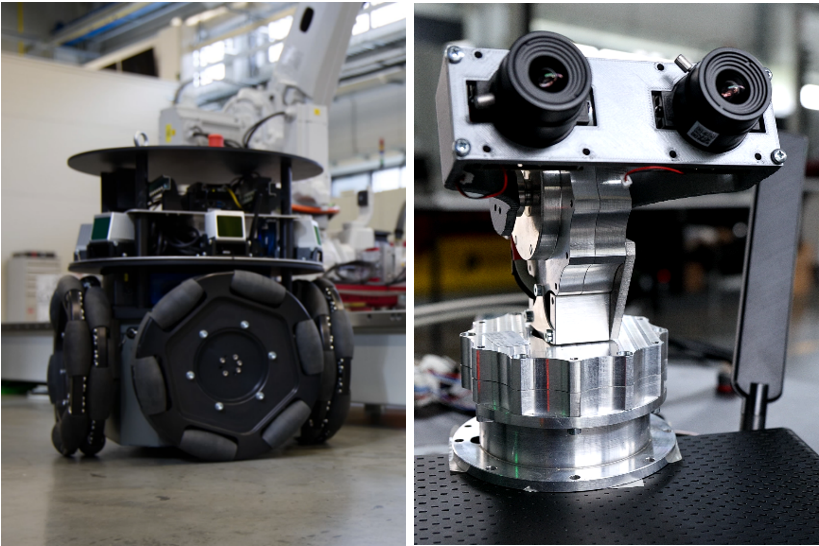

A set of three in-house-developed mobile robots with an omnidirectional drive configuration.

The robots are equipped with multiple 3D lidars, RGB cameras, computing modules, communication modules, and an advanced optoelectronic telepresence system.

The robots can operate in two main modes: autonomous, and teleoperated with visual telepresence.

In autonomous mode, the robots will be integrated into the factory control system and will be able to interact with it. Each robot knows its precise position and orientation as well as the positions of the other robots, and can recognize and avoid obstacles. The robots move fully autonomously based on commands from the superior control system.
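The localization module listed for this demonstrator is EKF-based, fusing odometry with AMCL and Vicon poses. The following is a minimal sketch, not the project's implementation: a planar extended Kalman filter with state (x, y, θ) that predicts from body-frame velocities (vx, vy, ω), since an omnidirectional base can also move sideways, and corrects with an absolute pose measurement such as an AMCL or Vicon pose. The function names, noise values, and the full-pose measurement model (H = identity) are assumptions for illustration.

```python
import math

Matrix = list[list[float]]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def mat_add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def inv3(m: Matrix) -> Matrix:
    """Inverse of a 3x3 matrix via the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def diag3(a: float, b: float, c: float) -> Matrix:
    return [[a, 0.0, 0.0], [0.0, b, 0.0], [0.0, 0.0, c]]


def ekf_predict(x, P, v, dt, Q):
    """Propagate pose x = [px, py, th] with body-frame velocity v = (vx, vy, w)."""
    px, py, th = x
    vx, vy, w = v
    c, s = math.cos(th), math.sin(th)
    x_new = [px + (vx * c - vy * s) * dt, py + (vx * s + vy * c) * dt, wrap(th + w * dt)]
    # Jacobian of the motion model with respect to the state
    F = [[1.0, 0.0, (-vx * s - vy * c) * dt],
         [0.0, 1.0, (vx * c - vy * s) * dt],
         [0.0, 0.0, 1.0]]
    return x_new, mat_add(mat_mul(mat_mul(F, P), transpose(F)), Q)


def ekf_update(x, P, z, R):
    """Correct with an absolute pose measurement z (assumed H = identity)."""
    y = [z[0] - x[0], z[1] - x[1], wrap(z[2] - x[2])]   # innovation
    K = mat_mul(P, inv3(mat_add(P, R)))                   # Kalman gain
    x_new = [x[j] + sum(K[j][k] * y[k] for k in range(3)) for j in range(3)]
    x_new[2] = wrap(x_new[2])
    i_minus_k = [[(1.0 if r == col else 0.0) - K[r][col] for col in range(3)] for r in range(3)]
    return x_new, mat_mul(i_minus_k, P)


# Illustrative noise values only.
x, P = [0.0, 0.0, 0.0], diag3(0.01, 0.01, 0.01)
x, P = ekf_predict(x, P, (1.0, 0.0, 0.0), 1.0, diag3(0.05, 0.05, 0.01))  # odometry: 1 m/s for 1 s
x, P = ekf_update(x, P, [1.2, 0.0, 0.0], diag3(0.06, 0.06, 0.02))        # e.g. an AMCL pose
print([round(v, 3) for v in x])  # [1.1, 0.0, 0.0]
```

Because the predicted and measured x-variances are equal here, the filter settles halfway between the odometry estimate (1.0 m) and the measurement (1.2 m).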

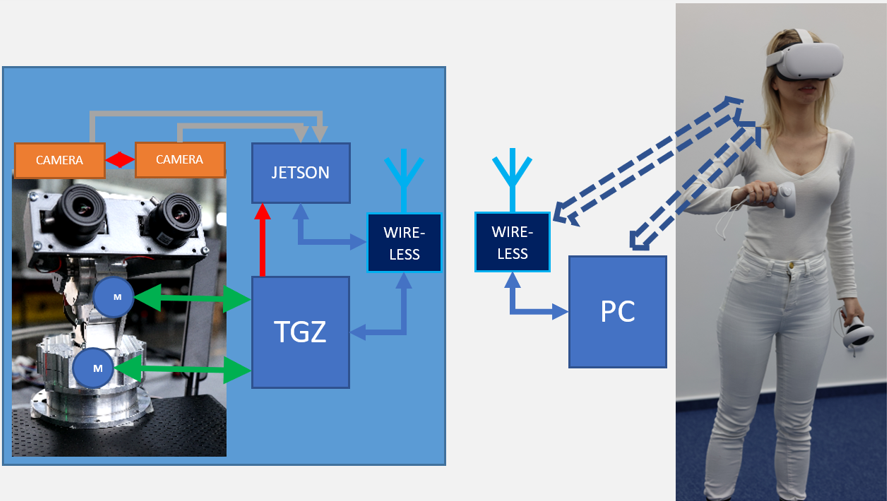

In teleoperated mode, a stereovision camera manipulator acquires visual information from the robot's surroundings. The data are then transferred wirelessly to “the factory” and can be forwarded anywhere else over the Internet. The image can be displayed on a classical 2D monitor or on 3D display devices, including head-mounted displays such as the Meta Quest Pro.

Two main approaches are being examined: direct transfer to the head-mounted display (Meta Quest Pro), and a PC-based operator station, which is much more powerful but lacks mobility and the possibility of using augmented reality.

Hardware

Custom-built omnidirectional mobile robot ODIN, developed in cooperation between Brno University of Technology and TG Drives, Czech Republic.

| PARAMETER | VALUE |
|---|---|
| Payload capacity | 300 kg |
| Wheel diameter | 320 mm |
| Maximum speed | 1.5 m/s |
| Platform dimensions | 700 × 700 × 1000 mm |
| Operation time | 4 hours |
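The table values allow a quick sanity check of the drive. For a 320 mm wheel (radius 0.16 m) rolling without slip at the maximum speed of 1.5 m/s, the wheel turns at ω = v / r = 9.375 rad/s, about 89.5 rpm; since the hardware list states that the AC motors have no gearboxes, this is presumably also the required motor shaft speed. This sketch assumes straight-line motion with pure rolling at the contact point; for omnidirectional wheels the ratio between platform speed and wheel speed can depend on the wheel type and direction of motion, which the source does not specify.

```python
import math


def wheel_speed(v_mps: float, wheel_diameter_m: float) -> tuple[float, float]:
    """Return (rad/s, rpm) for a wheel rolling without slip at linear speed v."""
    omega = v_mps / (wheel_diameter_m / 2.0)   # omega = v / r
    rpm = omega * 60.0 / (2.0 * math.pi)       # rad/s -> revolutions per minute
    return omega, rpm


omega, rpm = wheel_speed(1.5, 0.320)  # maximum speed and wheel diameter from the table
print(f"{omega:.3f} rad/s, {rpm:.1f} rpm")  # 9.375 rad/s, 89.5 rpm
```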

- Livox MID-70 lidars (6 pcs)

- Industrial RGB cameras (6 pcs)

- Intel NUC control computer

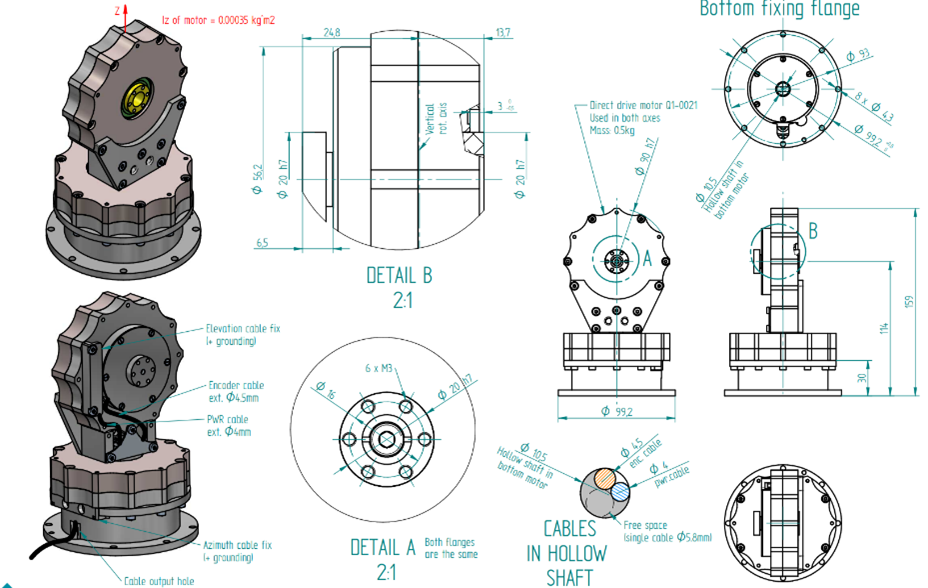

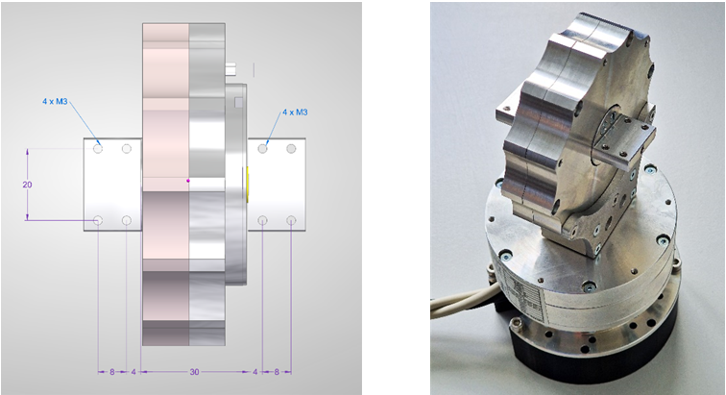

- Custom-built 2-DOF camera manipulators for the telepresence vision system

- Fast AC motors without gearboxes (direct drive)

- Custom motor drivers based on TGZ
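A common way to drive a 2-DOF camera manipulator for telepresence is to let it follow the operator's head orientation reported by the HMD. The sketch below is hypothetical: it assumes the HMD delivers yaw and pitch in degrees, and the joint limits and maximum step per control cycle are illustrative values, not parameters of the actual manipulators. It clamps the head angles to the joint range and rate-limits each command so the motors receive smooth set-points.

```python
from dataclasses import dataclass


@dataclass
class PanTiltLimits:
    # Illustrative values only; the real joint limits are not given in the source.
    pan_min: float = -170.0
    pan_max: float = 170.0
    tilt_min: float = -60.0
    tilt_max: float = 60.0
    max_step: float = 5.0  # maximum change per control cycle [deg]


def clamp(value: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, value))


def step_towards(current: float, target: float, max_step: float) -> float:
    """Move current towards target by at most max_step."""
    return current + clamp(target - current, -max_step, max_step)


def pan_tilt_command(head_yaw: float, head_pitch: float, pan: float, tilt: float,
                     limits: PanTiltLimits = PanTiltLimits()) -> tuple[float, float]:
    """Next (pan, tilt) set-point from HMD yaw/pitch; yaw wrap-around at +/-180 deg is not handled."""
    pan_target = clamp(head_yaw, limits.pan_min, limits.pan_max)
    tilt_target = clamp(head_pitch, limits.tilt_min, limits.tilt_max)
    return (step_towards(pan, pan_target, limits.max_step),
            step_towards(tilt, tilt_target, limits.max_step))


pan, tilt = 0.0, 0.0
for _ in range(3):  # operator looks to yaw 12 deg, pitch -80 deg (beyond the tilt limit)
    pan, tilt = pan_tilt_command(12.0, -80.0, pan, tilt)
print(pan, tilt)  # 12.0 -15.0
```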

Software Framework

Autonomous mode: ROS 2, C++, Python

Telepresence, depending on the hardware architecture:

- C++ in Android Studio (for the Meta Quest HMD), plus Linux and C++ on NVIDIA Jetson

- C# in Visual Studio on Windows (PC operator station), plus Linux and C++ on NVIDIA Jetson

Communication Platform

All communication is Ethernet-based, using either UDP or TCP/IP.

Command communication in ROS 2 is based on UDP; in our case, the DDS middleware is used.

In telepresence mode, communication between the operator station and the robot is based on UDP commands. Video is currently transferred via RTSP over UDP (H.264 codec), although other options are being examined.
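A UDP command channel of this kind can be as simple as a fixed-size binary datagram. The format below (sequence number, timestamp, and a body-frame velocity command vx, vy, ω) is a hypothetical example, not the project's actual protocol. The sequence number lets the robot discard duplicated or reordered datagrams, which matters because UDP guarantees neither ordering nor delivery.

```python
import socket
import struct
import time
from typing import Optional

# Hypothetical wire format (not the project's protocol): little-endian uint32 sequence
# number, float64 timestamp [s], float32 vx [m/s], vy [m/s], omega [rad/s].
CMD_FORMAT = struct.Struct("<Id3f")


def encode_command(seq: int, vx: float, vy: float, omega: float,
                   stamp: Optional[float] = None) -> bytes:
    """Pack one velocity command into a 24-byte datagram."""
    return CMD_FORMAT.pack(seq, time.time() if stamp is None else stamp, vx, vy, omega)


class CommandReceiver:
    """Robot-side filter that accepts only commands newer than the last one seen."""

    def __init__(self) -> None:
        self.last_seq = -1

    def accept(self, data: bytes) -> Optional[tuple[float, float, float]]:
        if len(data) != CMD_FORMAT.size:
            return None  # malformed datagram
        seq, _stamp, vx, vy, omega = CMD_FORMAT.unpack(data)
        if seq <= self.last_seq:
            return None  # duplicate or stale datagram (uint32 wrap-around ignored here)
        self.last_seq = seq
        return vx, vy, omega


def send_command(sock: socket.socket, robot_addr: tuple[str, int], payload: bytes) -> None:
    """Fire-and-forget send; a real sender would typically repeat commands at a fixed rate."""
    sock.sendto(payload, robot_addr)
```

On the operator side, `send_command(socket.socket(socket.AF_INET, socket.SOCK_DGRAM), (robot_ip, port), encode_command(seq, 0.3, 0.0, 0.0))` would be called periodically with an increasing `seq`; `robot_ip` and `port` are placeholders.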

Since the robots are mobile, communication is wireless. We can use, and will test, Wi-Fi 6, mobile 5G (SA and NSA), and MANET networks. The Brno University of Technology testbed is equipped with suitable communication infrastructure connected to the factory control system (Siemens PLC).

Scenario Description (Applications)

Autonomous mode: object transfer in the factory. The high payload capacity allows the robot to transfer even heavy materials or products. Since we have three platforms that will be fitted with identical equipment in the future, we can develop and test applications for the simultaneous operation of multiple robots on one site.

Telepresence mode: inspection of a remote factory. Is the 3D print going well? Why is the machine not working? Further uses include inspection after technological accidents, expert help with problems, and personal meetings in the factory.
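For the autonomous object-transfer scenario with several identical platforms on one site, the superior control system has to decide which robot takes a given job. A minimal, hypothetical dispatching rule assigns the job to the nearest idle robot that can carry the load. The robot names, the straight-line distance metric, and the data layout are assumptions for illustration; a real system would more likely use the path length from the global planner and also consider battery state.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Robot:
    name: str                      # hypothetical identifier
    x: float                       # current position in the factory frame [m]
    y: float
    idle: bool
    max_payload_kg: float = 300.0  # from the ODIN parameter table


def assign_job(robots: list[Robot], pickup: tuple[float, float],
               load_kg: float) -> Optional[Robot]:
    """Return the nearest idle robot able to carry load_kg, or None if none is available."""
    candidates = [r for r in robots if r.idle and r.max_payload_kg >= load_kg]
    if not candidates:
        return None
    best = min(candidates, key=lambda r: math.hypot(r.x - pickup[0], r.y - pickup[1]))
    best.idle = False  # reserve the robot for this job
    return best


fleet = [Robot("odin-1", 0.0, 0.0, idle=False),
         Robot("odin-2", 5.0, 1.0, idle=True),
         Robot("odin-3", 2.0, 2.0, idle=True)]
print(assign_job(fleet, pickup=(1.0, 1.0), load_kg=120.0).name)  # odin-3
```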

List of Used Modules and Realized Applications

| Module Name | Description | Application |
|---|---|---|
| Object Detection | Based on YOLOv5; the video stream of an RGB camera is analysed and the detected objects are classified. | Local Collaborative Assembly, Remote Collaborative Assembly |
| Localization Module | EKF-based pose estimation fusing odometry, AMCL, and Vicon data | Robot localization |
| Global Pose Estimation Module | AMCL (Adaptive Monte Carlo Localization) global localization based on 2D lidar scans and a known map of the surroundings | Robot localization |
| Trajectory Planning | DWB (Dynamic Window Approach): kinematically and perception-constrained local trajectory generation | Omnidirectional mobile robot trajectory planning |
| Mobile Robot Path Planning | Dijkstra's algorithm | Global path planning in the factory |
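YOLOv5, used by the object detection module, outputs many overlapping candidate boxes per object and filters them with non-maximum suppression (NMS). The sketch below is a plain, per-class greedy NMS based on intersection over union (IoU), written in pure Python to illustrate the idea; YOLOv5 itself performs this step with `torchvision.ops.nms`. The 0.45 IoU and 0.25 confidence thresholds are commonly used defaults, not values taken from this project.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    x1: float
    y1: float
    x2: float
    y2: float
    score: float
    cls: int


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    iw = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    ih = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = iw * ih
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def nms(dets: list[Detection], iou_thres: float = 0.45,
        conf_thres: float = 0.25) -> list[Detection]:
    """Greedy per-class NMS: keep boxes by descending score, drop same-class overlaps."""
    candidates = sorted((d for d in dets if d.score >= conf_thres),
                        key=lambda d: d.score, reverse=True)
    kept: list[Detection] = []
    for d in candidates:
        if all(k.cls != d.cls or iou(k, d) <= iou_thres for k in kept):
            kept.append(d)
    return kept


dets = [Detection(10, 10, 50, 50, 0.9, 0),
        Detection(12, 12, 52, 52, 0.8, 0),       # duplicate of the first box -> suppressed
        Detection(12, 12, 52, 52, 0.7, 1),       # same place, different class -> kept
        Detection(100, 100, 140, 140, 0.6, 0)]
print([d.score for d in nms(dets)])  # [0.9, 0.7, 0.6]
```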
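AMCL represents the robot pose as a set of weighted particles: after each lidar scan is scored against the known map, the particles are resampled in proportion to their weights. The sketch below shows low-variance (systematic) resampling, one standard resampling scheme in Monte Carlo localization; it is not necessarily the scheme used by the project's AMCL implementation. The adaptive part of AMCL (KLD-sampling, which varies the number of particles) and the sensor model are omitted, and the (x, y, θ) tuple representation is an assumption.

```python
import random
from typing import Sequence, TypeVar

T = TypeVar("T")


def low_variance_resample(particles: Sequence[T], weights: Sequence[float],
                          rng: random.Random) -> list[T]:
    """Systematic resampling: N evenly spaced pointers sharing one random offset."""
    n = len(particles)
    total = sum(weights)
    if n == 0 or len(weights) != n or total <= 0:
        raise ValueError("need matching, non-empty particles/weights with positive total")
    step = total / n
    pointer = rng.uniform(0.0, step)
    cumulative, i = weights[0], 0
    resampled: list[T] = []
    for _ in range(n):
        # Advance to the particle whose cumulative-weight interval contains the pointer.
        while pointer > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
        pointer += step
    return resampled


# Hypothetical (x, y, theta) particles with weights from a lidar-vs-map sensor model.
poses = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
print(low_variance_resample(poses, [0.1, 0.8, 0.1], random.Random(42)))
```

Compared with drawing N independent samples, the single random offset keeps the resampled set closer to the weight distribution and costs O(N).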
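DWB (presumably the Nav2 DWB controller) evaluates short candidate trajectories inside a dynamic window, i.e. the set of velocities reachable within one control period given the acceleration limits. The sketch below is a heavily simplified dynamic-window step for an omnidirectional base: it samples (vx, vy, ω) inside the window, forward-simulates each candidate, rejects those passing too close to a point obstacle, and picks the one ending nearest the goal. The limits, the clearance radius, the two-term cost, and the point-obstacle model are assumptions; Nav2's DWB instead scores trajectories with configurable critic plugins on a costmap.

```python
import itertools
import math


def linspace(lo: float, hi: float, n: int) -> list[float]:
    """n evenly spaced samples from lo to hi inclusive (n >= 2)."""
    return [lo + (hi - lo) * k / (n - 1) for k in range(n)]


def dwa_step(pose, vel, goal, obstacles, dt=0.1, horizon=1.0, v_max=1.5,
             w_max=1.0, acc=1.0, alpha=2.0, clearance=0.6, samples=5):
    """Pick (vx, vy, w) from the dynamic window around the current velocity `vel`.

    pose = (x, y, theta), goal = (x, y), obstacles = [(x, y), ...] as points.
    All limits are illustrative; v_max = 1.5 m/s matches the ODIN parameter table.
    """
    dv, dw = acc * dt, alpha * dt  # velocity change reachable in one control period
    window_vx = linspace(max(-v_max, vel[0] - dv), min(v_max, vel[0] + dv), samples)
    window_vy = linspace(max(-v_max, vel[1] - dv), min(v_max, vel[1] + dv), samples)
    window_w = linspace(max(-w_max, vel[2] - dw), min(w_max, vel[2] + dw), samples)
    best, best_cost = (0.0, 0.0, 0.0), math.inf  # command a stop if every candidate is rejected
    for vx, vy, w in itertools.product(window_vx, window_vy, window_w):
        x, y, th = pose
        min_dist = math.inf
        for _ in range(round(horizon / dt)):  # forward-simulate at constant velocity
            x += (vx * math.cos(th) - vy * math.sin(th)) * dt
            y += (vx * math.sin(th) + vy * math.cos(th)) * dt
            th += w * dt
            for ox, oy in obstacles:
                min_dist = min(min_dist, math.hypot(ox - x, oy - y))
        if min_dist < clearance:
            continue  # trajectory passes too close to an obstacle
        # Cost: distance from trajectory end to goal, plus a small penalty on turning.
        cost = math.hypot(goal[0] - x, goal[1] - y) + 0.1 * abs(w)
        if cost < best_cost:
            best, best_cost = (vx, vy, w), cost
    return best


print(dwa_step((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), goal=(5.0, 0.0), obstacles=[]))  # (0.1, 0.0, 0.0)
```

Starting from rest, the window only allows ±0.1 m/s in one step, so the robot accelerates towards the goal gradually over successive control cycles.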
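Global path planning with Dijkstra's algorithm can be sketched on a 2D occupancy grid, the usual representation of a known factory map. The example assumes 4-connected cells with unit step cost and a small hypothetical grid; the project's actual planner configuration is not specified here. In Nav2, for instance, the NavFn planner provides Dijkstra-based planning on a costmap, where cells additionally carry traversal costs.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]


def dijkstra(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Shortest 4-connected path on a grid where '#' marks occupied cells."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    parent: dict[Cell, Cell] = {}
    queue = [(0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:  # first time the goal is popped, its distance is final
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if d > dist[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                nd = d + 1  # uniform step cost
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (nd, (nr, nc)))
    return None  # goal unreachable


factory = ["....",
           ".##.",
           "...."]
path = dijkstra(factory, (0, 0), (2, 3))
print(len(path) - 1)  # 5
```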